How will OSFI regulate AI in Canadian financial institutions?

The Office of the Superintendent of Financial Institutions (OSFI) has taken notice of the AI revolution sweeping through Canada’s financial sector. With AI adoption in financial institutions projected to reach 70% by 2026, up from 50% in 2023, regulators are gearing up to address the unique challenges and risks this technology presents.

As AI systems become more deeply integrated into critical financial operations, from credit decisions to risk management, financial executives face a pressing question: how will regulatory expectations evolve to keep pace with this rapid technological change?

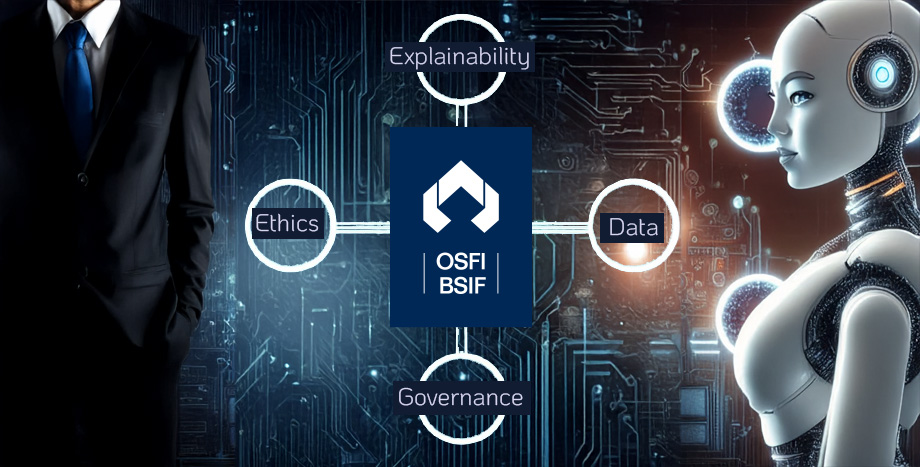

This article examines the emerging regulatory landscape for AI in Canadian financial institutions. We’ll explore OSFI’s recent findings, key risk areas identified by regulators, and the EDGE principles (Explainability, Data, Governance, Ethics) that are shaping the regulatory approach. By understanding these trends, financial leaders can proactively align their AI strategies with evolving regulatory expectations, ensuring both innovation and compliance in this new era of AI-driven finance.

OSFI and FCAC’s Recent Findings

The rapid increase in AI adoption within Canadian financial institutions has not gone unnoticed by regulators. OSFI, in collaboration with the Financial Consumer Agency of Canada (FCAC), has been closely monitoring this trend, and their findings are both illuminating and consequential for the industry.

1. Accelerating Adoption Rates:

The jump from 30% AI adoption in 2019 to 50% in 2023 is more than a statistic; it signifies a fundamental shift in how financial institutions operate. This acceleration has caught the attention of regulators and made guidance and oversight more urgent. For instance, OSFI’s recent technology risk bulletins have increasingly focused on AI and machine learning applications in financial services.

2. Projected Growth:

With AI adoption expected to reach 70% by 2026, OSFI is anticipating a financial landscape where AI is not just a competitive advantage but a standard operational tool. This projection is driving regulatory thinking towards more comprehensive and forward-looking frameworks. The recent joint OSFI-Bank of Canada report on “Third Party Risk Management” touched upon the increasing reliance on AI vendors, signaling a broader regulatory focus on AI ecosystems.

These findings underscore a critical point for financial executives: the regulatory landscape is not just reacting to current AI adoption but is actively preparing for a future where AI is ubiquitous in financial services.

Key Risk Areas Identified

As AI becomes more prevalent, OSFI has identified several key risk areas that financial institutions must address:

1. Business and Strategic Risks:

- Competitive Pressures: The rush to adopt AI could lead to hasty implementations without proper risk assessments. OSFI is concerned about the potential for “AI arms races” in the financial sector, where institutions prioritize speed over safety.

- Over-reliance on AI: There’s a risk of excessive dependence on AI systems without adequate human oversight. OSFI has emphasized the importance of maintaining human judgment in critical decision-making processes.

- Market Concentration: Smaller institutions might struggle to keep pace with AI advancements, potentially leading to market concentration. OSFI is likely to scrutinize how AI adoption affects market dynamics and competition.

2. Data Management and Privacy Concerns:

- Data Protection: As AI systems process vast amounts of sensitive financial data, ensuring compliance with data protection laws is paramount. OSFI is expected to align its guidance with existing frameworks like PIPEDA.

- Data Breaches: The increased use of AI amplifies the potential impact of data breaches. OSFI may require enhanced cybersecurity measures specifically tailored to AI systems.

- Third-Party Data Usage: The use of external data sources and AI vendors introduces new risks. OSFI’s focus on third-party risk management is likely to extend more explicitly to AI-related partnerships.

3. Ethical Considerations in AI Decision-Making:

- Fairness and Bias: Ensuring AI systems don’t perpetuate or exacerbate existing biases in financial services is a top concern. OSFI may require regular audits of AI models for fairness.

- Transparency: The “black box” nature of some AI algorithms is problematic from a regulatory standpoint. OSFI is likely to push for greater explainability in AI-driven decisions.

- Societal Impact: OSFI is considering the broader implications of AI in finance, such as its potential to widen or narrow financial inclusion gaps.
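To make the fairness audits mentioned under Fairness and Bias more concrete, here is a minimal sketch of one common check: comparing approval rates across customer groups. The group labels, the sample data, and the 0.8 review threshold are all illustrative assumptions (the threshold echoes the "four-fifths" rule of thumb from US employment contexts); none of them comes from OSFI.

```python
from collections import defaultdict

# Illustrative cutoff only; not a figure published by OSFI.
REVIEW_THRESHOLD = 0.8


def approval_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so no measurable disparity
    return min(rates.values()) / highest


# Hypothetical audit sample: group A approved 80/100, group B approved 60/100.
sample = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 60 + [("B", False)] * 40
)
rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A single ratio like this is only a screening signal. A real audit would also look at error rates, sample sizes, and the legitimate business factors behind any gap.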

Regulatory Framework: The EDGE Principles

In response to these challenges, OSFI is developing a regulatory approach based on the EDGE principles: Explainability, Data, Governance, and Ethics. These principles are expected to form the backbone of future AI regulations in Canadian financial services:

1. Explainability:

- OSFI is likely to require that financial institutions can clearly explain how their AI models arrive at decisions, especially those affecting customers directly.

- This may involve developing more interpretable AI models or creating robust explanation systems for complex models.

- Example: A bank using AI for credit decisions might need to provide clear, understandable reasons for loan approvals or denials to both customers and regulators.
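As a rough illustration of the credit-decision example above, the sketch below derives plain-language reasons from a toy logistic scoring model by ranking how much each input pulled the score down relative to a baseline applicant. The weights, baseline values, feature names, and reason wording are all hypothetical. This is one simple approach for an interpretable model, not a method OSFI prescribes.

```python
import math

# Hypothetical weights for a toy logistic credit model (not from any real lender).
WEIGHTS = {"debt_to_income": -3.0, "missed_payments": -0.8, "years_at_employer": 0.15}
INTERCEPT = 1.5

# Hypothetical reference applicant used as the comparison point.
BASELINE = {"debt_to_income": 0.30, "missed_payments": 0.0, "years_at_employer": 5.0}

REASON_TEXT = {
    "debt_to_income": "Debt is high relative to income",
    "missed_payments": "Recent missed payments on file",
    "years_at_employer": "Short time with current employer",
}


def score(applicant):
    """Approval probability from the toy logistic model."""
    z = INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def reason_codes(applicant, top_n=2):
    """Return the top_n factors that lowered the score most versus the baseline."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    # Sort ascending so the most negative contribution comes first.
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [REASON_TEXT[f] for _, f in negatives[:top_n]]


applicant = {"debt_to_income": 0.55, "missed_payments": 3, "years_at_employer": 1}
approved = score(applicant) >= 0.5
reasons = reason_codes(applicant)
print(approved, reasons)
```

For more complex models, the same idea is usually implemented with a dedicated attribution technique rather than raw weights, but the output a customer or regulator sees can look much the same: a short, ranked list of reasons.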

2. Data:

- Emphasis will be placed on data quality, relevance, and representativeness in AI models.

- OSFI may require regular data audits and the implementation of data governance frameworks specific to AI applications.

- Financial institutions might need to demonstrate how they ensure their data is free from biases and representative of their customer base.
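One way an institution might start to demonstrate representativeness is to compare the mix of segments in its training data with the mix in its actual customer base. The sketch below does this for a single attribute. The segment names, the counts, and the 5-percentage-point tolerance are assumptions chosen for illustration.

```python
from collections import Counter


def shares(values):
    """Fraction of records falling in each segment."""
    counts = Counter(values)
    total = sum(counts.values())
    return {segment: count / total for segment, count in counts.items()}


def representation_gaps(training_values, customer_values, tolerance=0.05):
    """Segments whose training share differs from the customer share by more
    than `tolerance`. Positive means over-represented in the training data."""
    train = shares(training_values)
    base = shares(customer_values)
    gaps = {}
    for segment in set(train) | set(base):
        diff = train.get(segment, 0.0) - base.get(segment, 0.0)
        if abs(diff) > tolerance:
            gaps[segment] = round(diff, 3)
    return gaps


# Hypothetical data: region of each record in the training set vs. the customer base.
training_regions = ["urban"] * 70 + ["rural"] * 10 + ["suburban"] * 20
customer_regions = ["urban"] * 50 + ["rural"] * 30 + ["suburban"] * 20

gaps = representation_gaps(training_regions, customer_regions)
print(gaps)
```

In this made-up sample, rural customers are under-represented in the training data, which is the kind of finding a data audit would be expected to surface and document.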

3. Governance:

- Clear accountability structures for AI systems will be essential.

- OSFI may require the designation of AI officers or committees responsible for overseeing AI implementations.

- Regular risk assessments and audits of AI models and their impacts will likely become mandatory.

4. Ethics:

- Ensuring AI systems align with ethical standards and societal values will be crucial.

- OSFI may require financial institutions to develop and adhere to ethical AI frameworks.

- This could involve regular ethics reviews of AI applications and their outcomes.

Anticipated Regulatory Focus

As OSFI continues to develop its approach to AI regulation, financial executives should anticipate:

1. Dynamic and Responsive Risk Management Systems:

- OSFI is likely to require ongoing monitoring and adjustment of AI systems, not just initial compliance checks.

- This may involve real-time monitoring capabilities and the ability to quickly adjust or shut down AI systems if issues are detected.

- Financial institutions might need to develop “AI incident response plans” similar to cybersecurity incident plans.
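The ongoing monitoring and shut-down capability described above could be sketched as a drift check paired with a simple kill switch. The example uses the population stability index (PSI), a widely used drift measure in credit modelling, to compare recent model scores against a baseline distribution. The `MonitoredModel` class, the score bands, and the 0.25 limit are hypothetical; the limit is a common rule of thumb, not a regulatory figure.

```python
import math


def psi(expected_shares, actual_shares, eps=1e-6):
    """Population stability index between two binned distributions."""
    total = 0.0
    for expected, actual in zip(expected_shares, actual_shares):
        expected, actual = max(expected, eps), max(actual, eps)  # avoid log(0)
        total += (actual - expected) * math.log(actual / expected)
    return total


class MonitoredModel:
    """Wraps a model with a drift check that disables it when PSI is too high."""

    def __init__(self, baseline_shares, psi_limit=0.25):
        self.baseline = baseline_shares
        self.psi_limit = psi_limit
        self.enabled = True

    def check(self, recent_shares):
        """Compute PSI for recent scores; switch the model off if over the limit."""
        value = psi(self.baseline, recent_shares)
        if value > self.psi_limit:
            self.enabled = False  # fall back to manual review until investigated
        return value


# Hypothetical share of applicants in each of four score bands.
model = MonitoredModel(baseline_shares=[0.25, 0.25, 0.25, 0.25])

stable_psi = model.check([0.24, 0.26, 0.25, 0.25])
enabled_after_stable = model.enabled

shifted_psi = model.check([0.05, 0.15, 0.30, 0.50])
enabled_after_shift = model.enabled
print(round(stable_psi, 4), enabled_after_stable, round(shifted_psi, 4), enabled_after_shift)
```

In practice the "disable" step would hand decisions to a documented fallback process, which is where an AI incident response plan would take over.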

2. Development of Specific Best Practices:

- OSFI may issue detailed guidelines on AI implementation for different financial sectors (banking, insurance, investment).

- These guidelines could cover areas like model validation, data management, and ethical AI use.

- OSFI might collaborate with industry leaders to develop practical, effective AI governance standards, possibly through pilot programs or sandbox initiatives.

3. Enhanced Reporting and Disclosure:

- Financial institutions may be required to provide more detailed reporting on their AI systems, including performance metrics, risk assessments, and ethical evaluations.

- Public disclosures about AI use and its impact on customers might become mandatory, enhancing transparency and trust.

4. Cross-Border Collaboration:

- Given the global nature of finance and AI, OSFI is likely to collaborate with international regulators to develop harmonized approaches to AI governance.

- This could lead to the adoption of global standards or principles for AI in financial services.

In conclusion, as AI becomes increasingly central to financial services in Canada, OSFI’s regulatory approach is evolving to ensure that innovation doesn’t come at the cost of stability, fairness, or consumer protection. Financial executives must stay ahead of these regulatory trends, not just to ensure compliance but to build AI strategies that are robust, ethical, and aligned with both regulatory expectations and business objectives. By embracing the EDGE principles and anticipating future regulatory focus areas, Canadian financial institutions can position themselves as responsible leaders in the AI-driven future of finance.

Avato specializes in solving complex integration challenges for financial institutions in Canada, with a focus on credit unions, aggregators and FinTech partners. Contact us to learn more about the Avato® Modernization Platform and how it can help you prepare your systems and data for AI, compliance and modernization.