Overview

Creating effective data sources for integration is essential for any organization that wants to get full value from its data. The process involves understanding where information originates, ensuring its quality, and establishing secure connections suited to the organization’s specific needs. A methodical approach is crucial, with steps such as:

- Information mapping

- Quality assurance

These steps improve decision-making and compliance while addressing the challenges of merging diverse data formats. What’s holding your team back from achieving seamless integration? We invite you to explore how our solutions can streamline this process and deliver significant value to your organization.

Introduction

In today’s fast-paced world of data management, the integration of diverse data sources stands as a fundamental pillar for organizations like ours, seeking to unlock the true power of information. Whether it’s databases, APIs, or cloud storage, a deep understanding of these sources is crucial for effective integration.

We explore the importance of data sources, providing a step-by-step guide to their creation along with best practices for overcoming integration challenges.

By prioritizing data quality, standardization, and security, we can significantly enhance our operational efficiency and fully realize the potential of our data assets, paving the way for informed decision-making and strategic growth.

What’s holding your team back from achieving this potential?

Understand Data Sources: Definition and Importance in Integration

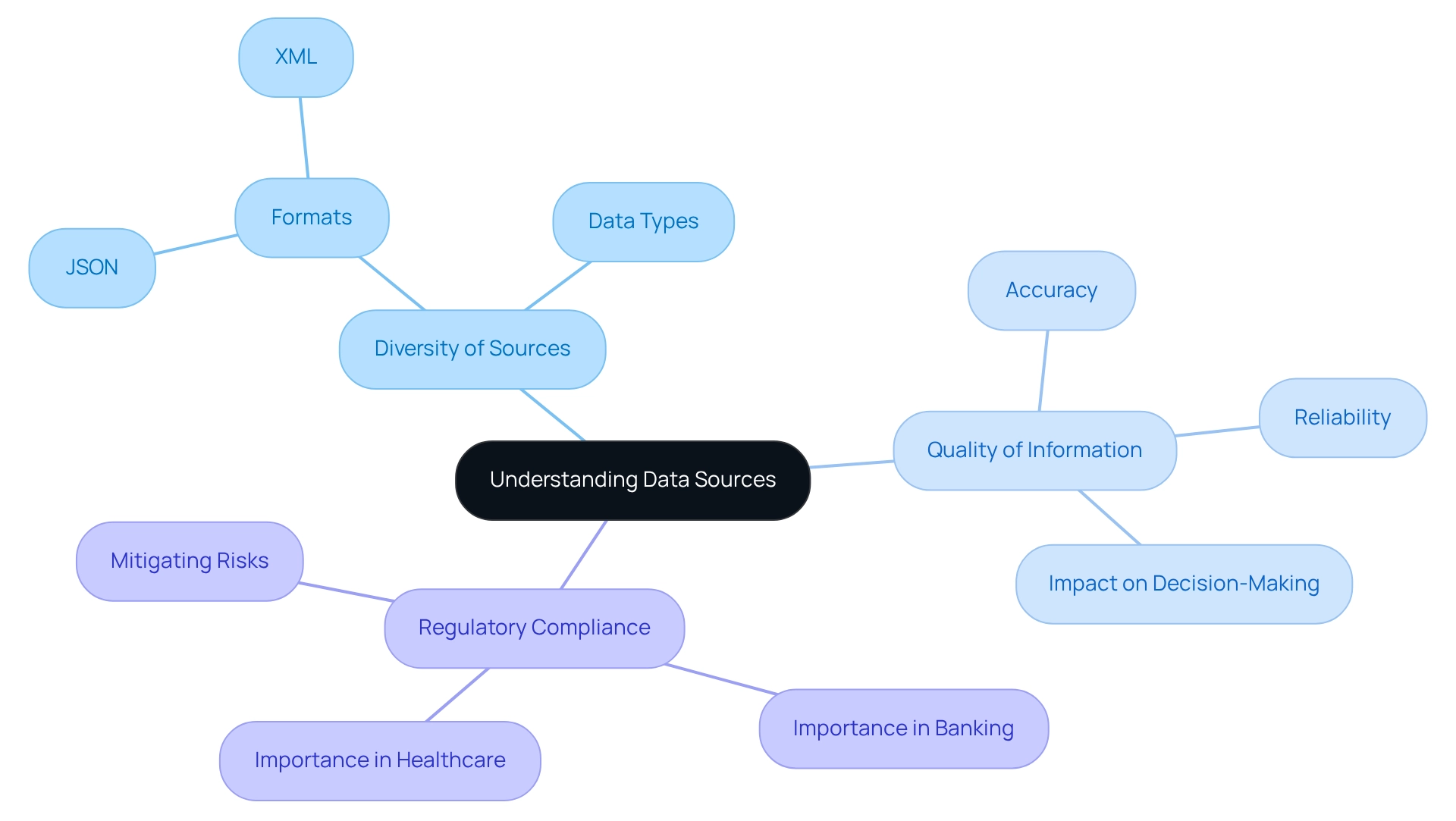

A data source is any origin of information that feeds an integration, including databases, APIs, flat files, and cloud storage. Understanding these origins is crucial for building a foundation that supports effective merging. Identifying our data sources matters for several reasons:

- Diversity of Sources: Different data sources present a range of formats, structures, and data types, which directly influence our integration approach and the overall success of our projects. For example, while JSON is often favored for its lightweight structure, XML is preferred for its comprehensive markup capabilities, which can be advantageous in specific contexts.

- Quality of Information: The reliability and accuracy of the sourced information are paramount, as they significantly impact the quality of insights derived from our integrated data. High-quality information not only enhances decision-making but also boosts operational efficiency.

- Regulatory Compliance: In highly regulated sectors such as banking and healthcare, a thorough understanding of information origins is essential for ensuring compliance with stringent regulations surrounding information management and privacy. This adherence not only mitigates risks but also fosters trust among stakeholders.
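To make the point about format diversity concrete, here is a minimal sketch that reads the same customer record from a JSON payload and an XML payload and normalizes both into one shape. The payloads, field names, and the `from_json` and `from_xml` helpers are hypothetical and exist only for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical sample payloads describing the same customer record.
JSON_PAYLOAD = '{"customer_id": "C-1001", "name": "Ada Lovelace", "balance": "250.75"}'
XML_PAYLOAD = """
<customer id="C-1001">
  <name>Ada Lovelace</name>
  <balance>250.75</balance>
</customer>
"""


def from_json(text: str) -> dict:
    """Parse the JSON payload into the common record shape."""
    raw = json.loads(text)
    return {
        "customer_id": raw["customer_id"],
        "name": raw["name"],
        "balance": float(raw["balance"]),
    }


def from_xml(text: str) -> dict:
    """Parse the XML payload into the same record shape."""
    root = ET.fromstring(text.strip())
    return {
        "customer_id": root.attrib["id"],  # stored as an attribute in XML
        "name": root.findtext("name"),
        "balance": float(root.findtext("balance")),
    }


json_record = from_json(JSON_PAYLOAD)
xml_record = from_xml(XML_PAYLOAD)
print(json_record == xml_record)
```

Each format needs its own parsing logic, but once both are reduced to a common record the downstream integration steps no longer need to know which source a record came from.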

As Vik Paruchuri, creator of Dataquest, emphasizes, “Aspiring analytics professionals should take charge of their own education and explore subjects that captivate them.” The same initiative applies to understanding data sources in our integration efforts. By identifying these elements, we empower organizations to make informed decisions about which data sources to incorporate, ultimately strengthening their analytics-driven strategies and improving integration success rates. For instance, the application of a dual frame design in health surveys illustrates how diverse information can enhance the representativeness of health-related findings, so that insights more accurately reflect the entire population.

Building a data source from multiple origins also demands meticulous planning and expertise in managing diverse information. As we advance through 2025, understanding data sources continues to grow in importance, and organizations need to prioritize information quality and source diversity in their integration efforts.

Create Data Sources: Step-by-Step Guide for Implementation

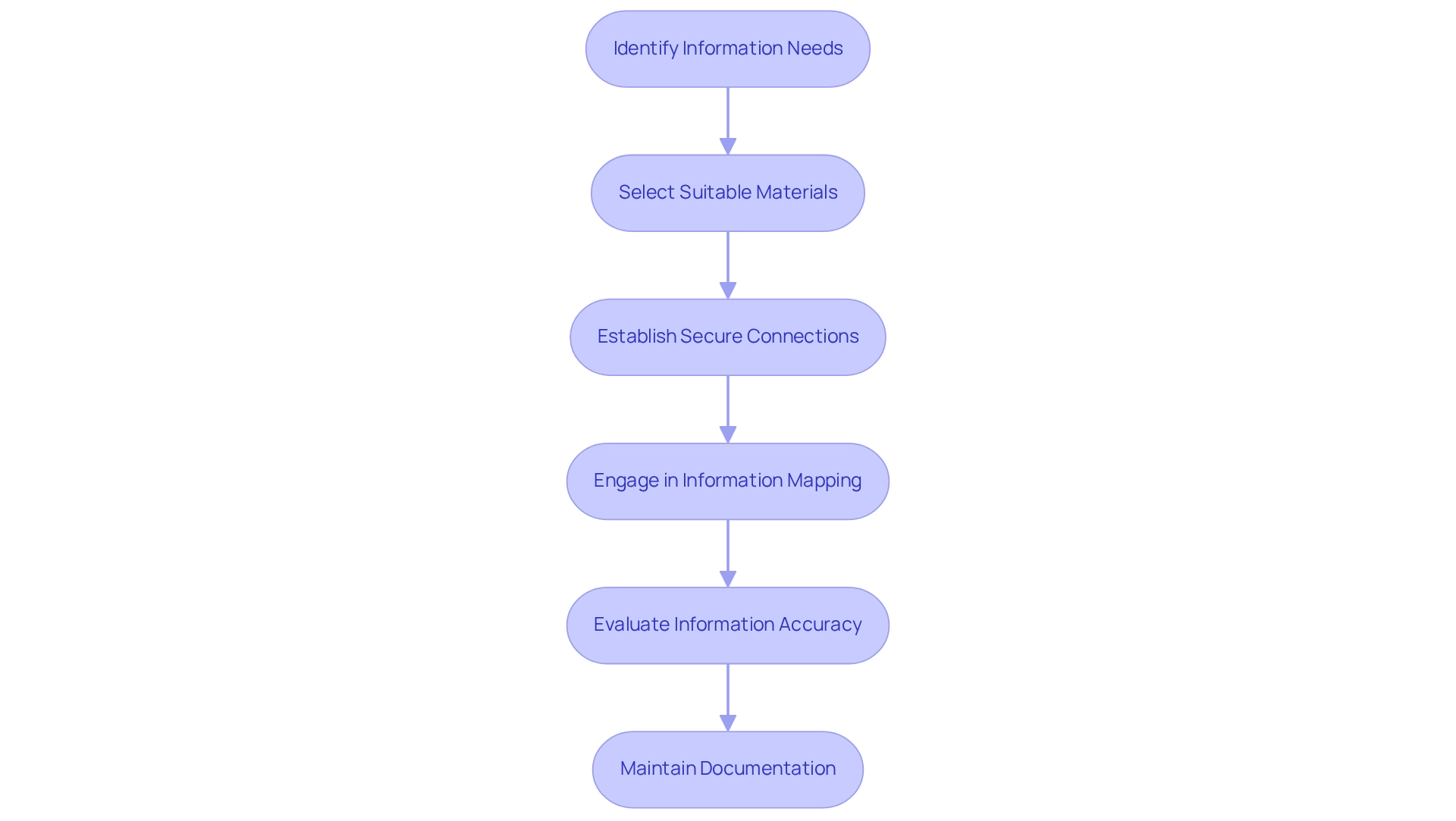

Creating data sources for efficient integration is pivotal for our merging projects. We must first identify our specific information needs: the types of information required, the expected volume, and the frequency of updates necessary to keep the system relevant and efficient.

Next, we select suitable sources by choosing information providers that align with our identified requirements. We assess factors such as information quality, accessibility, regulatory compliance, and the potential for integration with existing systems. We then establish connections to the chosen sources using connection tools or APIs. These connections must be secure and reliable so that sensitive information is safeguarded throughout the integration process. Applying the Five Safes Framework can help us evaluate and articulate disclosure risk qualitatively, supporting compliance and security in information management.
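As one illustration of the secure-connection step, the sketch below builds a TLS configuration with Python's standard `ssl` module. The `build_tls_context` helper and the TLS 1.2 minimum are assumptions chosen for the example, not a policy stated in this article.

```python
import ssl


def build_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context with certificate checks enabled."""
    # create_default_context() turns on certificate verification and
    # hostname checking for client connections.
    context = ssl.create_default_context()
    # Assumed policy for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

A context like this can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=context)` or `http.client.HTTPSConnection(host, context=context)`, so that every call to a source API uses the same settings.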

We then engage in information mapping, carefully aligning the fields from the source to the target system. This step is essential for precise information transfer, preventing discrepancies that could affect subsequent processes. When the source data is XML, XSLT can simplify this step by expressing the transformation declaratively, which reduces the effort involved in mapping.
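The mapping step can be sketched in a few lines. This example uses a plain Python dictionary rather than XSLT, and the field names, the `FIELD_MAP` table, and the `map_record` helper are all hypothetical.

```python
# Hypothetical mapping: source field name -> (target field name, converter).
FIELD_MAP = {
    "cust_no": ("customer_id", str),
    "full_name": ("name", str.strip),
    "acct_bal": ("balance", float),
}


def map_record(source: dict) -> dict:
    """Translate one source record into the target system's field names."""
    missing = [field for field in FIELD_MAP if field not in source]
    if missing:
        # Fail loudly instead of silently writing incomplete records.
        raise KeyError(f"source record is missing fields: {missing}")
    target = {}
    for src_field, (dst_field, convert) in FIELD_MAP.items():
        target[dst_field] = convert(source[src_field])
    return target


source_row = {
    "cust_no": 1001,
    "full_name": "  Ada Lovelace ",
    "acct_bal": "250.75",
    "legacy_flag": "Y",  # not in FIELD_MAP, so it is dropped
}
mapped = map_record(source_row)
print(mapped)
```

Keeping the mapping in a single table makes it easy to review with business stakeholders and to document alongside the source.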

We then test the integration to confirm that information is being accurately retrieved from the sources. We assess the accuracy and completeness of the information to ensure that the merge operates as intended. Employing schemas during this phase helps catch programming errors early, which reduces rework costs and improves reliability.
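A minimal sketch of schema checking during testing is shown below. It is a hand-rolled type check, not a full schema language such as JSON Schema or XSD, and the `SCHEMA` table and `validate` helper are hypothetical names.

```python
# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"customer_id": str, "name": str, "balance": float}


def validate(record: dict, schema: dict = SCHEMA) -> list[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    # Report fields the schema does not know about, in a stable order.
    for field in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


good = {"customer_id": "1001", "name": "Ada Lovelace", "balance": 250.75}
bad = {"customer_id": 1001, "name": "Ada Lovelace"}

print(validate(good))
print(validate(bad))
```

Running a check like this on a sample of retrieved records before go-live surfaces type and completeness problems while they are still cheap to fix.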

Finally, we maintain comprehensive documentation of information origins, connection methods, and any transformations applied. This practice aids future maintenance and troubleshooting, and it makes our integrations more durable and dependable, which in turn promotes operational efficiency and innovation.

As described by the Australian Bureau of Statistics (ABS), the Five Safes Framework adopts a multi-dimensional strategy for managing disclosure risk, which is crucial for data custodians in the banking sector. This framework enables effective management and mitigation of risks related to information access, ensuring compliance with privacy policies and enhancing overall security. We, at Avato, are deeply committed to designing technology solutions that support these initiatives, allowing businesses to extract value from their information assets.

Navigate Challenges: Best Practices for Effective Data Source Integration

Combining information sources presents a significant challenge that we must address. To navigate these difficulties effectively, we recommend the following best practices:

-

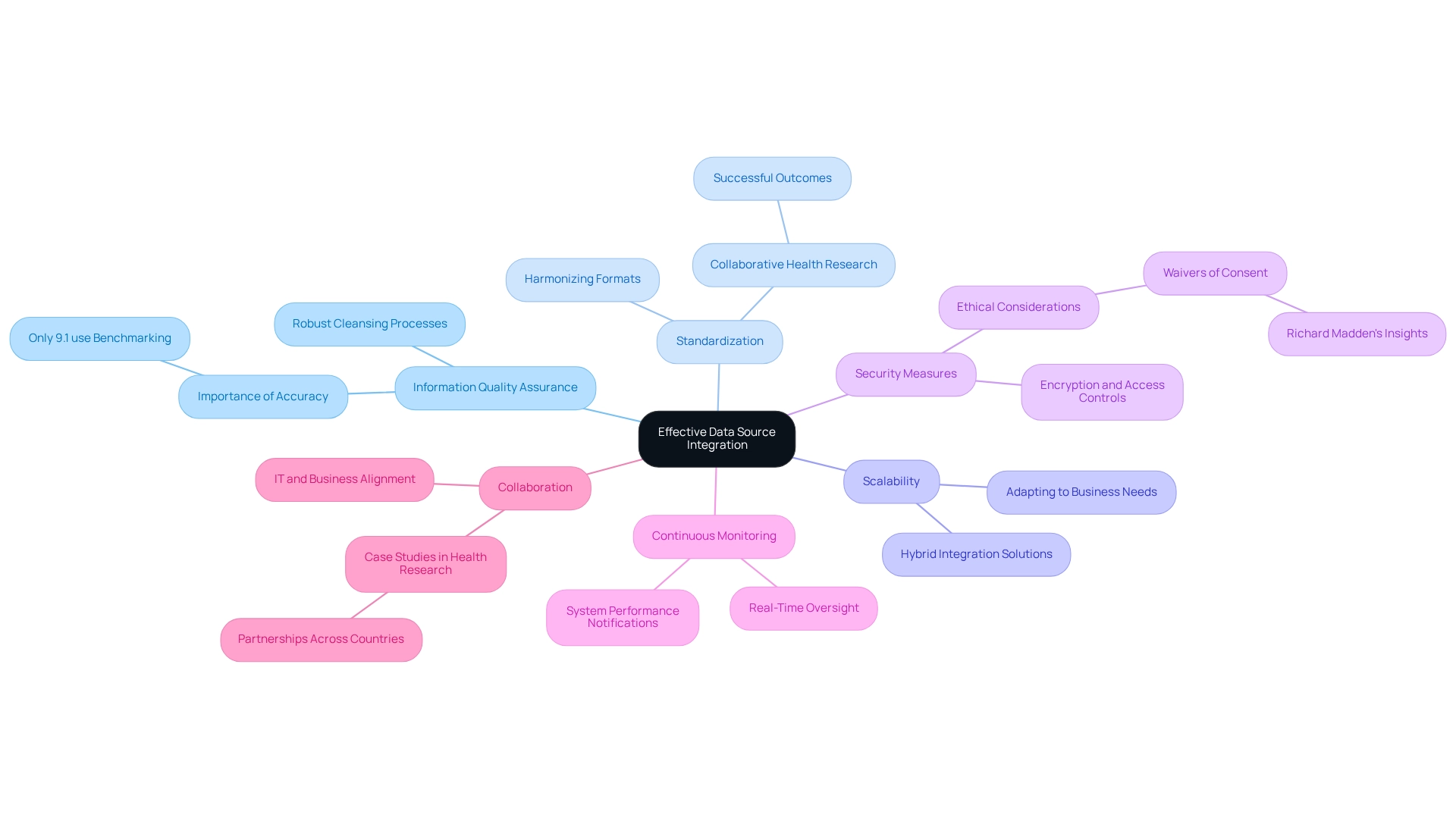

Information Quality Assurance: We must implement robust cleansing processes to ensure the accuracy and reliability of our integrated information. Consistent oversight of information integrity is crucial to avoid issues that could jeopardize merging results. As we look to 2025, the emphasis on information accuracy assurance remains essential; studies indicate that only 9.1% of organizations employ benchmarking strategies to enhance information integrity. This statistic underscores the necessity for us to adopt more stringent quality measures to improve merging success.

- Standardization: By harmonizing formats and structures across sources, we enable smoother merging and significantly reduce the complexity of mapping. For instance, collaborative efforts in health research have shown that uniform information practices lead to more successful outcomes, highlighting the importance of consistency in information management.

- Scalability: We should choose integration solutions, such as Avato’s Hybrid Integration Platform, that can grow alongside our organization. This ensures that as information volumes increase, the integration process remains efficient and effective, adapting to evolving business requirements while maximizing the value of legacy systems and liberating isolated assets.

- Security Measures: We must prioritize security by implementing encryption and access controls to protect sensitive information during integration. This is particularly critical in sectors like banking and healthcare, where information integrity and confidentiality are paramount. Avato’s secure hybrid integration platform ensures 24/7 uptime and reliability, addressing the ethical dimensions of information management, including the necessity for waivers of consent in connection projects, as highlighted by Richard Madden.

- Continuous Monitoring: We should implement real-time oversight of information flows to quickly identify and resolve any issues that arise during integration. Ongoing supervision helps maintain information quality and operational effectiveness. Avato’s platform supports this with notifications regarding system performance.

- Collaboration: We encourage teamwork between our IT and business divisions to ensure that integration efforts align with organizational objectives and user needs. This collaborative approach can enhance the efficiency of our development projects, as evidenced by case studies that showcase successful partnerships in health research. Such collaborations not only improve project outcomes but also ensure that diverse perspectives are included in the integration process, particularly when creating data source connections.

By adhering to these best practices, we can enhance our data integration efforts and effectively mitigate common challenges, ensuring a seamless connection between legacy systems and modern data sources.
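Several of the practices above (quality assurance, standardization, and monitoring) can be illustrated together in one small sketch. The field names, the list of accepted date formats, and the 25% rejection threshold are assumptions made for the example. Because formats are tried in order, genuinely ambiguous dates would need a per-source format in practice.

```python
from datetime import datetime

# Assumed input formats, tried in order; real sources should declare theirs.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def standardize_date(value: str) -> str:
    """Return the date in ISO 8601 form (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def cleanse(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Standardize rows, then split them into clean and rejected lists."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        try:
            record = {
                "customer_id": row["customer_id"].strip().upper(),
                "opened": standardize_date(row["opened"]),
            }
        except (KeyError, ValueError, AttributeError):
            rejected.append(row)  # missing field or unparseable value
            continue
        if not record["customer_id"] or record["customer_id"] in seen:
            rejected.append(row)  # blank or duplicate key
            continue
        seen.add(record["customer_id"])
        clean.append(record)
    return clean, rejected


def reject_rate_alert(clean: list, rejected: list, threshold: float = 0.25):
    """Monitoring check: flag a batch whose rejection rate exceeds threshold."""
    total = len(clean) + len(rejected)
    rate = len(rejected) / total if total else 0.0
    return rate > threshold, rate


rows = [
    {"customer_id": " c-1001 ", "opened": "2024-03-01"},
    {"customer_id": "C-1002", "opened": "15/04/2024"},
    {"customer_id": "c-1001", "opened": "03-01-2024"},    # duplicate key
    {"customer_id": "C-1003", "opened": "last Tuesday"},  # unparseable date
]
clean, rejected = cleanse(rows)
alert, rate = reject_rate_alert(clean, rejected)
print(clean, len(rejected), alert, rate)
```

Rejected rows are kept rather than discarded so that they can be reviewed with the source owner, which ties the quality check back to the collaboration practice.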

Conclusion

Understanding and effectively managing diverse data sources is paramount for organizations like ours that aim to harness the full potential of our data assets. The exploration of data sources reveals their critical role in ensuring high-quality, reliable, and compliant data integration. By recognizing the importance of selecting appropriate sources, establishing secure connections, and maintaining rigorous data quality standards, we can enhance our operational efficiency and decision-making capabilities.

Implementing a structured approach to creating data sources, as outlined in our step-by-step guide, empowers us to navigate the complexities of integration. From identifying data requirements to thorough testing and documentation, each step is designed to ensure a seamless integration process that meets our evolving business needs. The emphasis on best practices, such as standardization, scalability, security measures, and continuous monitoring, provides a robust framework for overcoming challenges that may arise during our integration efforts.

Ultimately, our commitment to understanding data sources and adhering to best practices not only enhances integration success rates but also positions us for strategic growth in an increasingly data-driven landscape. By prioritizing these elements, we can unlock valuable insights and drive informed decision-making, paving the way for a future where data serves as a powerful catalyst for innovation and success. What steps will we take to leverage these insights and fully realize the potential of data integration?