Overview

This article covers the core components of an ingestion pipeline: data sources, ingestion methods, transformation processes, storage solutions, and monitoring practices. Together, these components determine how reliably an organization can gather, process, and use data from diverse sources, and they directly affect data quality. Organizations that implement them well can base decisions on accurate insights and get more value from their data, supporting both strategic growth and operational efficiency.

Introduction

In an increasingly data-driven world, the significance of ingestion pipelines is paramount. These sophisticated systems are essential for collecting, processing, and integrating data from diverse sources into centralized repositories, enabling organizations to harness their data assets effectively.

As businesses confront the challenges of managing various data types and ensuring quality, understanding the complexities of ingestion pipelines becomes crucial. How can organizations identify key data sources and select appropriate storage solutions? Navigating this intricate landscape is vital for optimizing data strategies.

Furthermore, with the rise of real-time analytics and the necessity for seamless data flow, the role of ingestion pipelines is poised to evolve, driving innovation and operational efficiency across industries.

This article delves into the critical components of ingestion pipelines, exploring their significance, methods, and best practices for successful implementation.

Understanding Ingestion Pipelines: A Comprehensive Overview

An ingestion pipeline is a system that gathers, processes, and imports data from various sources into a centralized storage or processing location. It serves as the backbone of data integration, allowing organizations to manage and use their data assets effectively. Ingestion pipelines handle a wide range of data types, including structured, semi-structured, and unstructured formats.

The significance of ingestion pipelines continues to grow as we approach 2025 and organizations increasingly depend on data-driven decision-making. Recent statistics reveal that 35% of the challenges encountered during data integration arise at the cleaning phase, while 34% occur during the input stage. These figures underscore the role pipelines play in ensuring data quality and reliability. Moreover, a substantial number of organizations now build ingestion pipelines into their data management strategies.

Michael Felderer remarks, ‘This study establishes the foundation for upcoming investigations into pipeline quality,’ emphasizing the need for robust ingestion pipelines that uphold high data standards.

These pipelines ensure that data flows seamlessly from its origin to its destination, making it readily available for analysis and informed decision-making. DataOps practices complement this by automating parts of the data acquisition process and fostering collaboration between data engineers and data users, which makes data management more effective.

Real-world applications demonstrate how ingestion pipelines streamline complex data integration projects. For instance, Avato’s hybrid integration platform has been recognized for simplifying the connection of isolated legacy systems and fragmented data, enabling organizations to modernize operations swiftly while minimizing downtime. The platform provides real-time monitoring and alerts on system performance, significantly reducing costs and allowing organizations to access data and systems in weeks, not months.

This capability is crucial for sectors such as banking and healthcare, where secure and trustworthy data integration is paramount. Avato’s platform specifically addresses the challenges faced by banking IT managers by offering a secure and efficient means to integrate legacy systems, thereby enhancing operational capabilities and cutting costs.

In summary, ingestion pipelines are vital components of data integration, providing the infrastructure organizations need to get full value from their data. As data management continues to evolve, the role of ingestion pipelines will grow increasingly significant, enhancing efficiency and innovation across sectors. Current studies, including those conducted by Ph.D. candidates Harald Foidl and Valentina Golendukhina, further emphasize the importance of deepening our understanding of data-intensive systems and their integration.

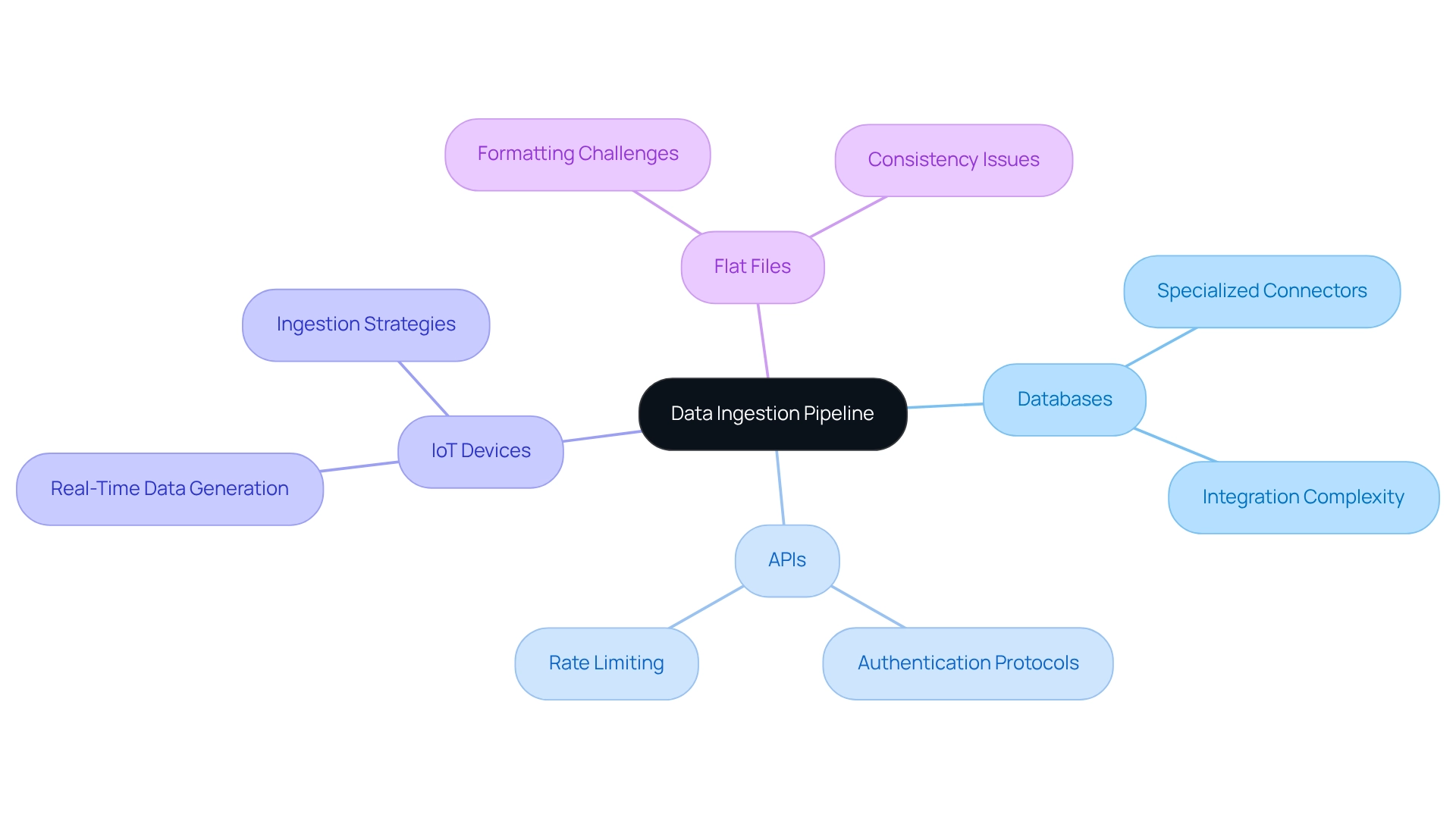

Identifying Key Data Sources for Effective Ingestion

An ingestion pipeline draws on data sources in diverse formats, including databases, APIs, IoT devices, and flat files. Each source type presents its own challenges and opportunities. Databases, for instance, often require specialized connectors for efficient data extraction, which complicates integration efforts.

APIs introduce considerations such as authentication protocols and rate limiting, which can obstruct data flow if not managed carefully. IoT devices generate vast amounts of real-time data and need ingestion strategies that keep processing and analysis timely. Flat files may seem simpler, but they can pose challenges related to formatting and consistency. Understanding the distinctive characteristics of each source is crucial for building robust ingestion pipelines that accommodate all of these input types.
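To make the rate-limiting concern concrete, here is a minimal Python sketch of paging through an API source with exponential backoff. It is an illustration under stated assumptions rather than any particular vendor's client: `RateLimited`, `fetch_all_pages`, and the `fetch_page` callable are hypothetical names, and the real request (including authentication) is assumed to live inside `fetch_page`.

```python
import time
from typing import Callable, Iterator, Optional


class RateLimited(Exception):
    """Hypothetical signal that the source asked us to slow down (e.g. HTTP 429)."""


def fetch_all_pages(
    fetch_page: Callable[[int], Optional[list]],
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[dict]:
    """Yield records page by page, backing off exponentially when rate limited."""
    page = 0
    while True:
        for attempt in range(max_retries + 1):
            try:
                records = fetch_page(page)
                break
            except RateLimited:
                if attempt == max_retries:
                    raise  # give up after the final retry
                sleep(base_delay * 2 ** attempt)  # wait 1s, 2s, 4s, ...
        if not records:  # None or an empty page marks the end of the data
            return
        yield from records
        page += 1
```

Passing `sleep` as a parameter keeps the sketch testable without real waiting; a production pipeline would more likely honor whatever retry guidance the API itself returns.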

Avato’s hybrid integration platform helps ensure quality at the source, which improves the integrity of the whole pipeline. Leveraging Avato’s solutions allows organizations to proactively tackle compatibility issues, which statistics indicate affect 8% of the posts on Stack Overflow about data pipelines, across different pipeline phases. For banking IT managers, resolving these compatibility issues is vital to avert operational delays and rising costs.

Practical case studies illustrate the impact of efficient source integration. For instance, by integrating streams of client data, banks can apply real-time analytics similar to those used by telecommunications firms to refine their customer engagement strategies. This capability not only boosts operational efficiency but also reveals new revenue opportunities through timely insights.

As Gustavo Estrada noted, Avato can simplify complex projects and deliver results within the desired time frames and budget constraints, a sentiment particularly relevant for banking IT managers facing integration challenges. By focusing on these critical data sources and their associated challenges, organizations can improve their ingestion pipelines to meet the demands of 2025 and beyond, ultimately strengthening their operations through seamless data and system integration.

Exploring Ingestion Methods: Batch vs. Real-Time

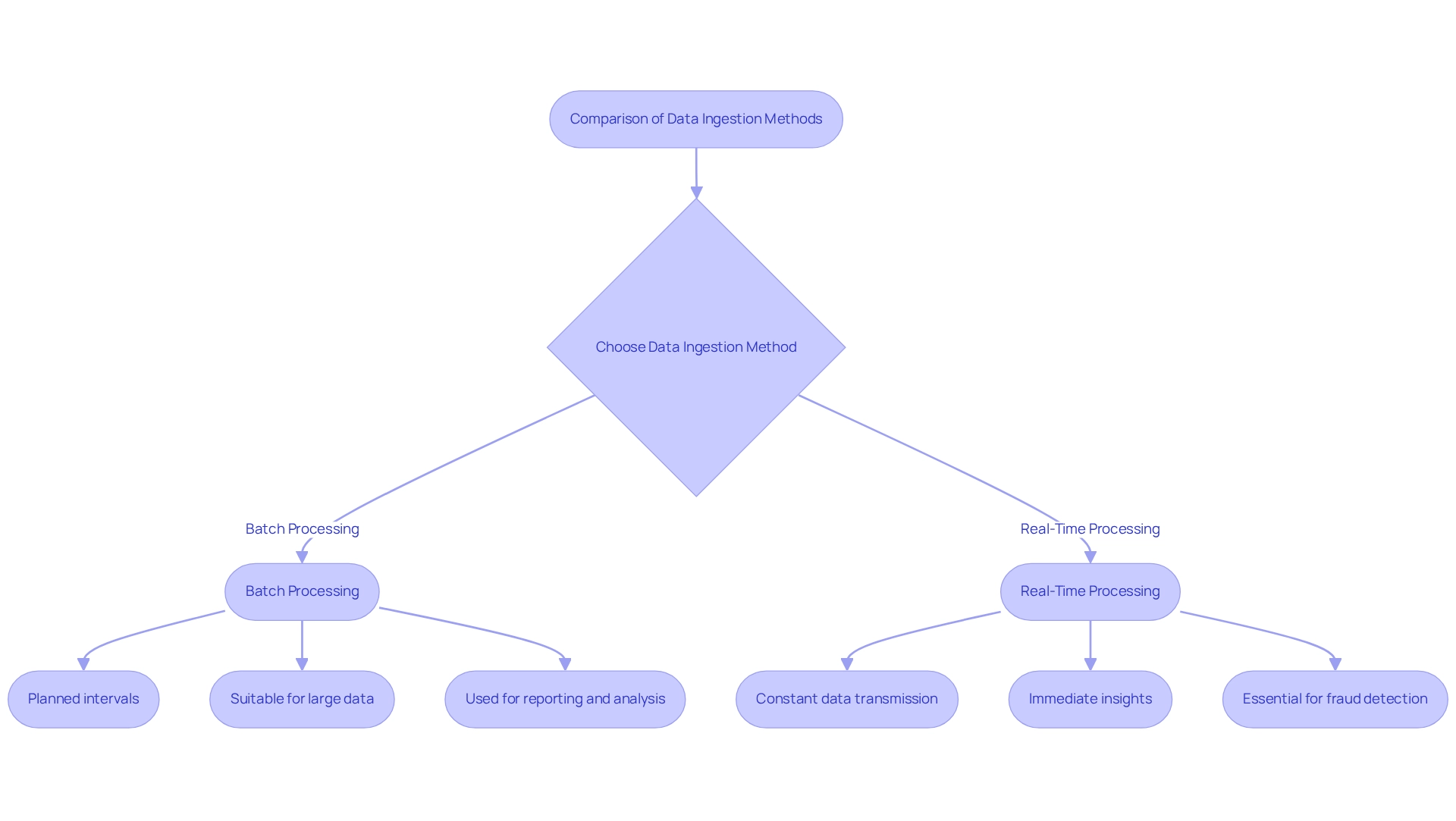

Data ingestion methods can be broadly categorized into batch and real-time (streaming) processes, each serving distinct purposes based on organizational needs. Batch ingestion gathers and processes data at planned intervals, making it suitable for large volumes of data that do not need prompt processing. This method is frequently used for tasks such as end-of-day reporting or historical data analysis, where immediate insights are less critical.

Real-time ingestion, on the other hand, continuously transmits data as it is created, allowing organizations to obtain instant insights and act quickly. This method is especially beneficial for financial organizations, where handling transaction data in real time is essential for tasks such as fraud detection and risk management. For instance, banks using real-time data processing can identify suspicious transactions within seconds, significantly reducing potential losses.
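The difference between the two modes can be sketched in a few lines of Python. This is a simplified illustration, not a production design: `ingest_in_batches` and `ingest_as_stream` are hypothetical names, and an in-memory iterable stands in for a real source such as a database export or a message queue.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def ingest_in_batches(source: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Batch style: collect records and hand them over in fixed-size groups."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def ingest_as_stream(source: Iterable[dict], handle: Callable[[dict], None]) -> int:
    """Streaming style: act on each record as soon as it arrives."""
    count = 0
    for record in source:
        handle(record)
        count += 1
    return count
```

In the fraud-detection case, the `handle` callback would be the place to flag a suspicious transaction immediately, whereas the batch variant would only see it once its group is processed.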

Statistics indicate growing adoption of real-time data ingestion among financial institutions, with recent studies showing that over 60% of banks are now implementing real-time processing capabilities. This shift reflects increasing awareness that prompt data delivery improves customer experiences and operational efficiency. Avato’s hybrid integration platform supports this trend by ensuring 24/7 uptime for critical integrations and offering real-time monitoring and alerts on system performance, both of which are vital for reliable real-time data processing.

When choosing between batch and real-time processing, organizations must assess their specific needs, including speed and volume. Real-time processing is preferable when immediate insights are necessary, while batch processing may suffice for less time-sensitive data. Understanding these distinctions is crucial for improving data strategies and ensuring that financial institutions can react effectively to market demands.

As we enter 2025, trends suggest that the preference for real-time processing will keep growing, fueled by advances in technology and the increasing complexity of data environments. Real-world examples of financial institutions successfully implementing real-time data ingestion highlight its effectiveness in improving operational capabilities and reducing costs. For example, Avato’s hybrid integration platform has been crucial in streamlining complex integration projects, enabling organizations to reach their ingestion pipeline objectives quickly and effectively.

As Gustavo Estrada, a customer, noted, “The platform has simplified complex projects and delivered results within desired time frames and budget constraints,” underscoring its impact on operational efficiency. Additionally, other clients have reported significant cost reductions and enhanced customer satisfaction due to the seamless integration capabilities provided by Avato.

Data Transformation: Preparing Your Data for Analysis

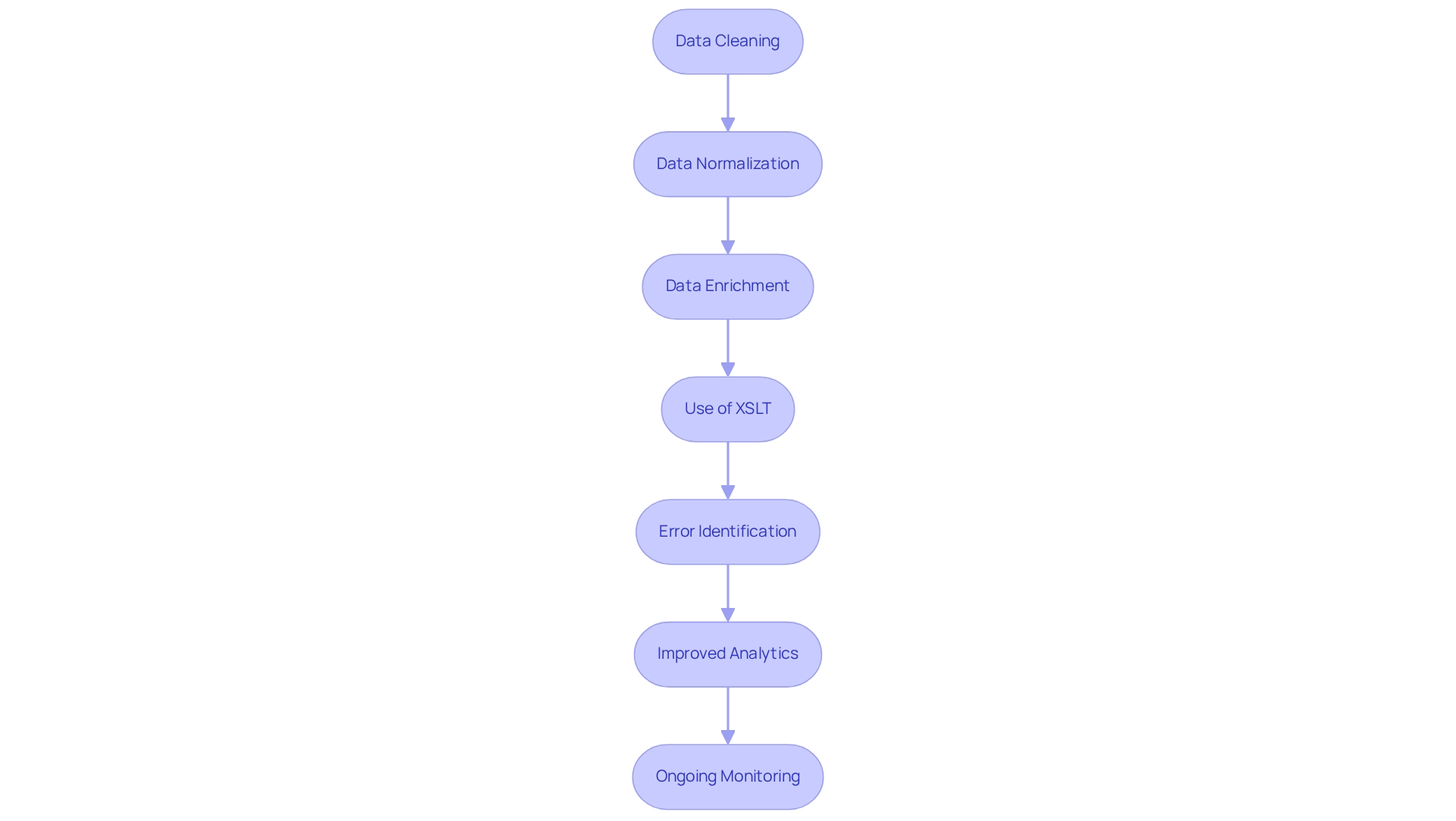

Data transformation is the process of cleaning, normalizing, and enriching data to prepare it for analysis. It involves removing duplicates, converting data types, and applying specific business rules to ensure consistency across datasets. Effective transformation is essential for generating precise insights and supporting informed decision-making, particularly in data-intensive sectors.
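As a rough illustration of these steps, the Python sketch below deduplicates records, converts text fields to typed values, and applies one business rule. The field names (`id`, `amount`, `booked_on`, `currency`), the `USD` default, and the 10,000 threshold are all assumptions made for the example, not rules taken from any real system.

```python
from datetime import date
from typing import Iterable, List


def transform(records: Iterable[dict]) -> List[dict]:
    """Deduplicate by id, convert types, and apply one example business rule."""
    seen = set()
    cleaned = []
    for raw in records:
        record_id = raw["id"].strip()
        if record_id in seen:  # drop duplicates, keeping the first occurrence
            continue
        seen.add(record_id)
        amount = float(raw["amount"])  # convert text to a number
        cleaned.append({
            "id": record_id,
            "amount": amount,
            "booked_on": date.fromisoformat(raw["booked_on"]),  # text to date
            "currency": raw.get("currency", "USD").upper(),  # assumed default, normalized casing
            "is_large": amount >= 10_000,  # hypothetical business rule (enrichment)
        })
    return cleaned
```

A real pipeline would also decide what to do with rows that fail conversion, for example routing them to a quarantine table instead of letting the exception stop the run.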

XSLT, a declarative language designed for transforming XML, can make XML data transformation considerably more efficient. Its tight integration with XPath and its extensive library of data manipulation functions enable the automation of transformation tasks, minimizing manual errors and preserving data integrity. Furthermore, using schemas alongside XSLT can catch programming errors early in development, which saves cost and reduces the risk of mistakes in integration projects.

Consider the healthcare sector: converting patient data to standardized formats not only enhances analytics but also improves reporting capabilities. A robust transformation framework can automate these conversions and keep the results consistent. Statistics show that organizations prioritizing data transformation experience substantial improvements in their analytics results, with many reporting enhanced operational capabilities and decreased costs.

Moreover, ongoing monitoring after transformation is crucial for sustaining data quality, so that organizations can make decisions based on trustworthy data. For example, Avato’s hybrid integration platform guarantees 24/7 availability for essential connections and offers real-time monitoring and notifications regarding system performance, showing its dependability in preserving data quality after transformation. The platform also simplifies complex integrations, maximizing the value of legacy systems while significantly reducing costs.

By implementing best practices in data transformation, businesses can unlock the full potential of their insights, leading to more effective strategies and enhanced performance across industries. As Gustavo Estrada, a customer, noted, ‘Avato has simplified complex projects and delivered results within desired time frames and budget constraints.’ This underscores how Avato addresses the challenges of integrating disconnected legacy systems and fragmented data, establishing itself as a valuable ally for organizations in the banking sector.

Notably, Avato’s successful implementation of hybrid integration solutions at Coast Capital, which went live in February 2013, exemplifies its expertise in the financial sector, showcasing its ability to handle complex integrations with minimal downtime.

Choosing the Right Storage Solutions for Ingested Data

Choosing the appropriate storage solution for ingested data is crucial for maintaining both accessibility and integrity. Organizations typically face three primary options: data lakes, data warehouses, and cloud storage solutions.

Data lakes excel at managing vast amounts of unstructured data, making them ideal for scenarios where flexibility and scalability are paramount. Conversely, data warehouses are tailored for structured data and complex analytical queries, delivering optimized performance for business intelligence tasks.

When selecting the best storage solution, organizations must assess various factors, including data volume, access patterns, and compliance requirements. For instance, a financial institution may opt for a secure cloud storage solution to meet stringent regulatory demands while ensuring rapid access to critical transaction data. Avato’s hybrid integration platform offers a reliable, future-ready technology stack that streamlines the ingestion pipeline across diverse storage solutions, enabling companies to adapt to changing needs and improve their operational efficiency.

Current trends indicate a shift from traditional batch processing to event-driven architectures, which support continuous data processing and immediate insights. This shift underscores the need for storage solutions that facilitate real-time data access and analytics, a capability that Avato’s platform delivers so that organizations can use their data for swift decision-making.

As enterprises increasingly adopt data lakes and warehouses, statistics reveal that a significant number of organizations are using these technologies to refine their data management strategies. The scale involved is considerable: the world generated 33 zettabytes of data in 2018, which emphasizes the growing importance of effective storage solutions. Avato has garnered praise from clients like Gustavo Estrada for its ability to simplify complex project implementations and achieve results within desired timelines and budget constraints.

Ultimately, the choice of a storage solution should align with the organization’s specific needs and future growth strategies, ensuring that data remains accessible and usable in an ever-evolving landscape. With Avato’s dedicated approach, businesses can navigate the complexities of data management with confidence and create value. Avato’s commitment to addressing intricate challenges ensures that clients receive strong support in their integration endeavors.

Monitoring and Maintenance: Ensuring Pipeline Reliability

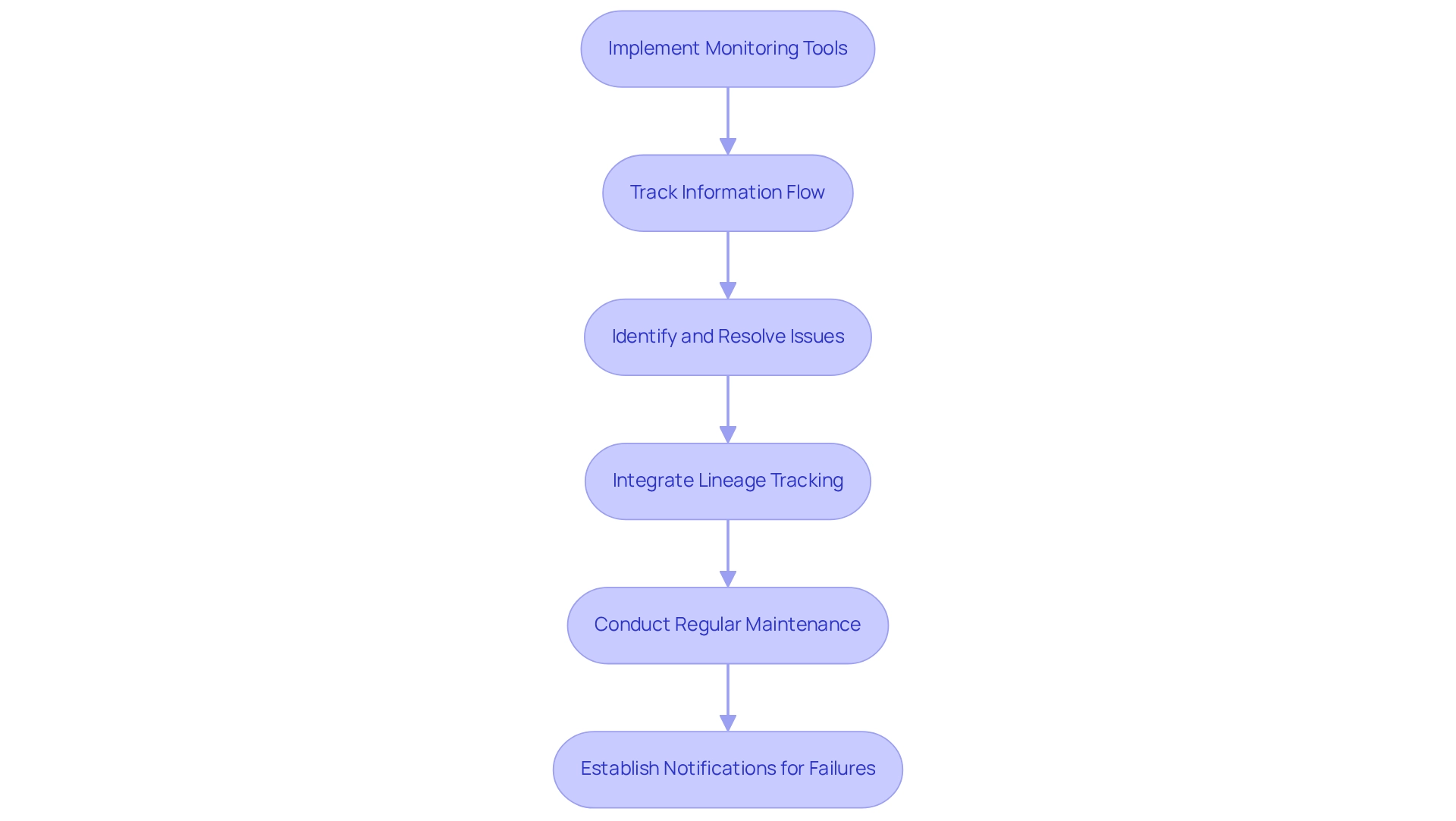

Monitoring and maintenance are critical elements of a successful ingestion pipeline. Organizations must implement robust monitoring tools, such as those offered by Avato, to track data flow, performance metrics, and error rates. This proactive approach lets teams identify and resolve issues before they escalate, ensuring uninterrupted access to data.

For instance, the integration of lineage tracking with tools like OpenLineage in Airflow not only enhances visibility into data transformations but also simplifies debugging processes, which is vital for compliance and operational efficiency. This aligns with the emphasis on continuous monitoring and optimization in hybrid solutions, designed to provide comprehensive analytics capabilities that bolster operational performance.

Regular maintenance activities, including updating connectors and optimizing performance, are essential for sustaining the efficiency of ingestion pipelines. Establishing notifications for pipeline failures is a best practice that enables teams to respond promptly to disruptions, thereby preserving the integrity of the pipeline. Statistics reveal that organizations prioritizing monitoring and maintenance experience significantly fewer operational challenges. Avato, for its part, ensures 24/7 uptime for critical integrations.

This level of reliability is paramount, particularly in sectors such as banking and healthcare, where data integrity is non-negotiable.
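The practice of tracking error rates and notifying on failures can be sketched as follows. This is a minimal, assumption-laden example: `PipelineMonitor`, its 5% default threshold, and the `alert` callback are hypothetical, and a real deployment would normally rely on its monitoring platform's own alerting rather than hand-rolled counters.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PipelineMonitor:
    """Tracks record counts and sends one alert when the error rate gets too high."""
    alert: Callable[[str], None]
    max_error_rate: float = 0.05  # hypothetical threshold: 5% failed records
    min_records: int = 20  # avoid alerting on a tiny sample
    processed: int = 0
    failed: int = 0
    alerted: bool = False

    @property
    def error_rate(self) -> float:
        return self.failed / self.processed if self.processed else 0.0

    def record(self, ok: bool) -> None:
        """Register the outcome of one record and alert if the threshold is crossed."""
        self.processed += 1
        if not ok:
            self.failed += 1
        over = self.processed >= self.min_records and self.error_rate > self.max_error_rate
        if over and not self.alerted:
            self.alerted = True  # notify once per incident, not once per record
            self.alert(f"error rate {self.error_rate:.1%} after {self.processed} records")
```

The `alert` callback could post to a chat channel or a paging service; alerting only once per incident is a deliberate choice here to avoid flooding the team during an outage.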

Real-world examples of proactive maintenance underscore its effectiveness. Businesses that routinely assess their ingestion pipelines and implement necessary updates report enhanced performance and reduced downtime. Insights from structured interviews with data engineers consistently highlight the importance of pipeline reliability, demonstrating that a well-maintained ingestion pipeline not only supports operational objectives but also cultivates trust in data-driven decision-making.

As Gustavo Estrada articulated, Avato simplifies complex projects and delivers results within desired time frames and budget constraints, further emphasizing the significance of effective monitoring and maintenance practices. Avato’s commitment to architecting technology solutions originates from its foundation as a collective of enterprise architects dedicated to streamlining integration processes, ensuring that businesses can unlock isolated assets and generate substantial value.

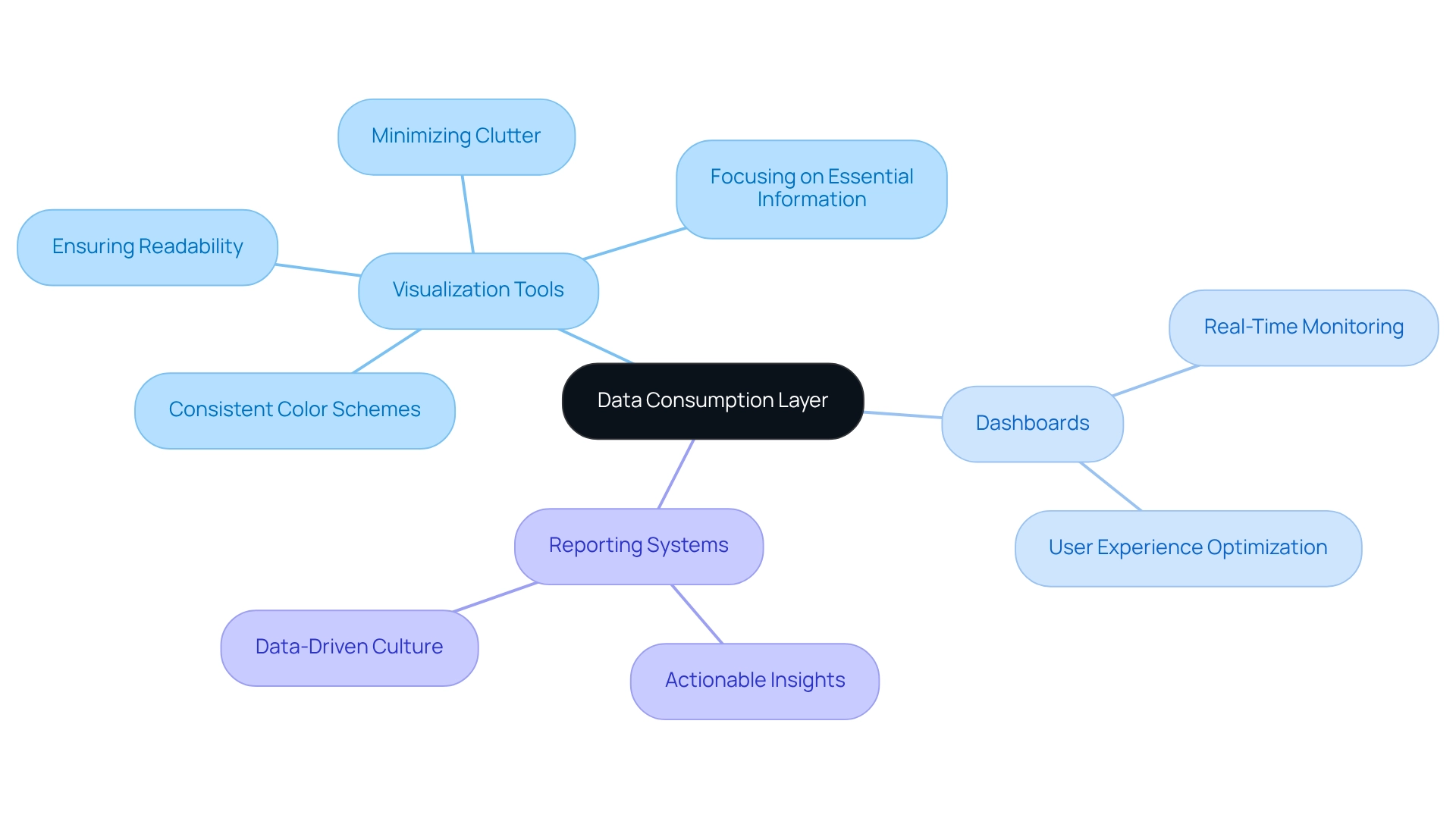

Building the Data Consumption Layer: Making Data Actionable

The data consumption layer is the final stage of the ingestion pipeline, turning ingested data into actionable insights for end-users. This layer encompasses visualization tools, dashboards, and reporting systems that let stakeholders extract meaningful insights from the data. A well-structured consumption layer is crucial for ensuring that users can easily access and interpret data, thereby fostering informed decision-making.

Consider a retail organization that implements a dashboard visualizing sales metrics in real-time. This capability enables swift adjustments to inventory levels and marketing strategies. Such agility is especially vital in today’s fast-paced business environment, where companies with advanced analytical capabilities are twice as likely to rank in the top quartile of financial performance and five times more likely to make quicker decisions.
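As a small illustration of what feeds such a dashboard, the Python sketch below rolls ingested sale events up into revenue per product. The event fields (`product`, `quantity`, `unit_price`) and the function name `sales_by_product` are assumptions for the example; an actual consumption layer would typically query the storage layer or a BI tool instead.

```python
from collections import defaultdict
from typing import Dict, Iterable


def sales_by_product(events: Iterable[dict]) -> Dict[str, float]:
    """Roll up sale events into revenue per product for a dashboard tile."""
    totals: Dict[str, float] = defaultdict(float)
    for event in events:
        totals[event["product"]] += event["quantity"] * event["unit_price"]
    return dict(totals)
```

Recomputing this summary as new events arrive is what lets the dashboard reflect current sales and support the inventory and marketing adjustments described above.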

Emphasizing user experience within the consumption layer is key to optimizing the value derived from information. Effective visual representation methods include:

- Utilizing consistent color schemes

- Minimizing clutter

- Ensuring readability

- Focusing on essential information

By adhering to these principles, organizations can develop user-friendly dashboards that enhance engagement and yield better outcomes.

Furthermore, a market survey revealed that 39% of organizations struggle to cultivate a data-driven culture, often due to challenges in providing relevant data models. This underscores the importance of efficient visualization tools in decision-making processes. As Gustavo Estrada noted, the platform simplifies complex projects and delivers results within specified time frames and budget constraints, which applies equally to building efficient data consumption layers.

Avato’s hybrid integration platform not only transforms legacy systems but also helps businesses safeguard their operations through seamless data and system integration, with features such as real-time monitoring and cost reduction. As organizations navigate the complexities of data integration, the design of the ingestion pipeline will be pivotal in shaping their success.

Conclusion

Ingestion pipelines are fundamental to effective data management, serving as the essential framework for collecting, processing, and integrating data from diverse sources. As organizations increasingly rely on data-driven decision-making, grasping the complexities of these pipelines is vital. This article illustrates how these systems not only enhance data quality but also enable real-time analytics, which is crucial for sectors such as banking and healthcare.

Identifying key data sources and employing suitable ingestion methods—whether batch or real-time—are crucial for optimizing data strategies. By addressing the unique challenges posed by various data formats and ensuring seamless integration, organizations can unlock significant operational efficiencies. Furthermore, data transformation processes are pivotal in preparing data for analysis, underscoring the necessity for robust frameworks that bolster data integrity and reliability.

Choosing the right storage solutions is equally critical, as it impacts both data accessibility and compliance. As organizations navigate the evolving landscape of data management, the focus on monitoring and maintenance ensures that ingestion pipelines remain reliable and efficient. The integration of advanced monitoring tools can significantly reduce operational disruptions, enabling businesses to uphold high standards of data integrity.

Ultimately, constructing a strong data consumption layer converts raw data into actionable insights, empowering organizations to make informed decisions swiftly. By prioritizing user experience in data visualization, businesses can cultivate a data-driven culture that fosters better outcomes. As the role of ingestion pipelines continues to evolve, investing in these essential systems will empower organizations to harness the full potential of their data assets, driving innovation and operational excellence in the years ahead.